Our Blogs

- What is Snowflake?

- How to Use Snowflake data warehouse?

- Snowflake Integration with Talend

- Talend Snowflake Components

- Related ELT Components for Snowflake

- What is Snowflake?

Snowflake is analytic cloud data warehouse build on top of Amazon Web Services (AWS) cloud Infrastructure and is a true SaaS (Software as a Service) offering platform. There is no need to select, install, configure or manage any hardware. And also, there is no software you need to install, configure or manage. It cannot be run on any private cloud or hosted Infrastructure. All needed ongoing management, maintenance and tuning is handled by snowflake itself.

- Key Capabilities

- AWS and Azure Only (As on Date)

- No PK/FK Enforcement (Informational only)

- Snowflake SQL

- DDL/DML

- SQL Functions

- User Defined Functions/Stored Procedure (using java Script)

- Views/Materialized Views

- ACID Transactions

- Analytical Aggregations, Windowing and Hierarchical Queries

- Integration Supports

- Data integration Tools (Talend, Informatica, Pentaho etc.)

- Self-Services BI Tools (Tableau, QlikView, Spotfire etc.)

- Big Data Tools (Kafka, Spark, Databricks etc.)

- JDBC/ODBC Drivers

- Native Language Connectors (Python, Go, Node.jsetc.)

- SQL Interface & Client

- Snowflake web Interface

- Snowflake CLI

- DBeaver

- Unique Features

- Scalability (Storage and Compute)

- User Experience (Performance)

- No Indexing

- No Performance Tuning

- No Partitioning

- No Physical Storage Design

- Tunable pay per use

- How to use Snowflake data warehouse

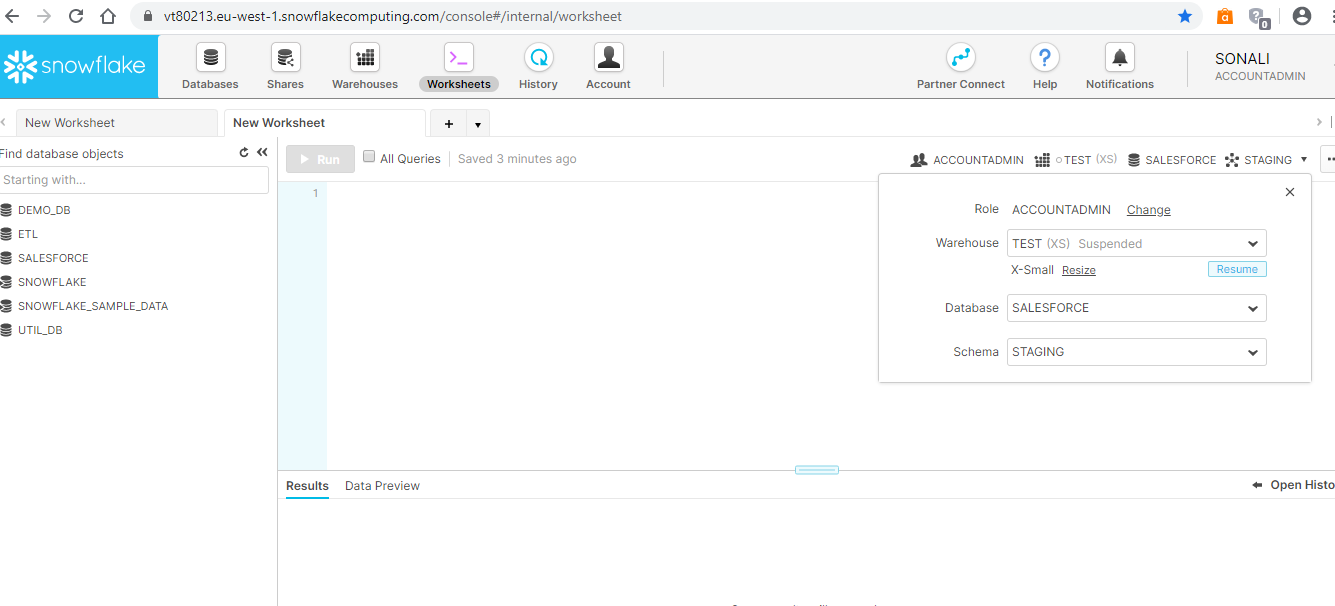

It is pretty easy to learn and handle snowflake. You can find open source 30 days trial version. Provide all the details required and do sign up for the first time. After login you can see the screen like this:

From the right-side menu, you can switch Role, Warehouse, Database and Schema. To create all these, you can run simple create SQL query on worksheet provided. There are few tabs you can see databases, Warehouse, Worksheet, History and Account on topmost menu. All created database you can see on database tab and simultaneously warehouse and history details you can find. If you interested in detailed information about snowflake architecture visit snowflake documentation here https://docs.snowflake.com/en/.

- Snowflake Integration with Talend

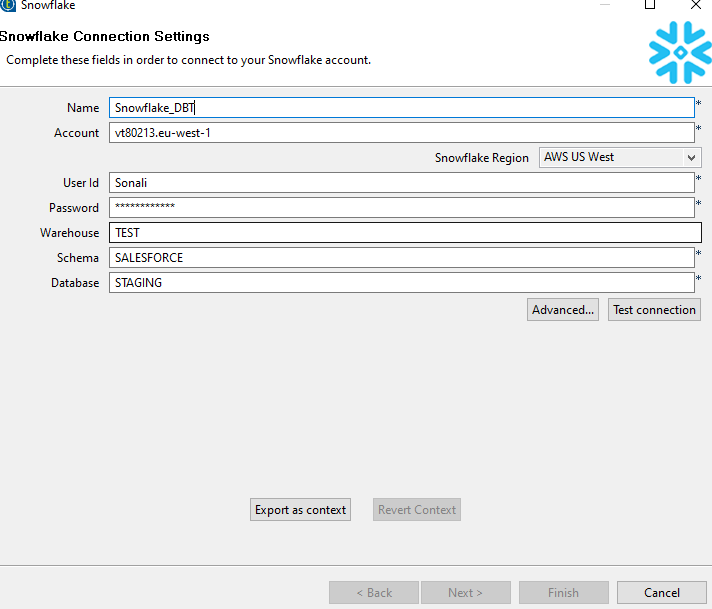

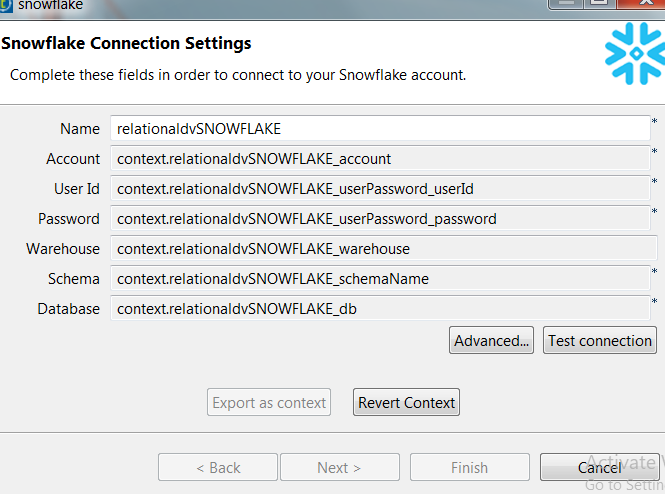

- Creating a metadata connection to snowflake

- Inside project repository find the Metadata section

- Locate the Snowflake icon

- Right click and select snowflake connection menu option

- Inside connection definition dialog box fill up the details like Name, Account, User ID, Password, Warehouse Name, Schema and database name

- Initially just fill the parameter details manually to establish a valid connection but best practice is to convert it into a context group with assigned variables of connection parameters

- In Advanced ensure that Login Timeout, Tracing and Role settings as per requirement

- Click ok then click Test connection to ensure connection validity

- Once made a valid connection click next

- Select the tables as per your requirement then click finish

- Talend Snowflake Components

- tSnowflakeConnection:

Use to manage a connection to Snowflake that can be reuse by another Snowflake components.

You can either drag from metadata snowflake connection and select tSnowflakeConnection component or type tSnowflakeConnection and configure all the properties

- Standared properties

- Basic Settings

|

Database |

Select the database list and apply accordingly |

|

Property type |

Select the way connection details build in or repository |

|

Account |

Enter provided account name within double quotes |

|

Snowflake Region |

Select AWS region or Azure region from dropdown list |

|

User Id and password |

Enter provided login name within double quotes and click […] button to enter password within double quotes |

|

Warehouse |

Enter Warehouse name within double quotes as per your project |

|

Schema |

Enter schema name to be used within double quotes |

|

Database |

Enter database name to be used within double quotes |

- Advance settings

|

Use custom snowflake Region |

To use customize snowflake region select the checkbox and provide region id as per region used within double quotes like eu-west-1 or east-us |

|

Login Timeout |

Specify login timeout to wait for response when connecting to snowflake before returning an error |

|

Role |

Provide the snowflake role you have assigned if empty it will take public role automatically granted |

|

TStatCatcher Statistics |

This check box is for gather the job processing metadata at the job level as well as component level |

- Global variables

|

ERROR_MESSAGE |

This is the error message generated by the component when error occurs |

- Usage

|

Usage Rule |

It creates a snowflake connection and works with other sub jobs that reuse this connection using trigger link |

- tSnowflakeInput:

Use to reads data from a snowflake table into the data flow of your job based on an SQL query.

- Standard properties

- Basic settings

|

Database |

Select the database list and apply accordingly |

|

Property type |

Select the way connection details build in or repository |

|

Connection Component |

Select the connection component and use existing connection |

|

Account |

Enter provided account name within double quotes |

|

User Id and password |

Enter provided login name within double quotes and click […] button to enter password within double quotes |

|

Warehouse |

Enter Warehouse name within double quotes as per your project |

|

Schema |

Enter schema name to be used within double quotes |

|

Database |

Enter database name to be used within double quotes |

|

Table |

Click […] button and in select snowflake table to be used |

|

Schema and Edit Schema |

create and store the schema locally for this component or use already created schema in the repository. You can reuse it in various projects and job designs. |

|

Manual query |

Select the checkbox and write proper SQL query within double quotes |

|

Condition |

Enter the Boolean expression to be used to filter data from your snowflake table within double quotes |

- Advance settings

|

Login Timeout |

Specify login timeout to wait for response when connecting to snowflake before returning an error |

|

Role |

Provide the snowflake role you have assigned if empty it will take public role automatically granted |

|

Tracing |

Use for taking log for snowflake JDBC driver |

|

Allow snowflake to convert column to uppercase |

Select checkbox to convert lowercase to uppercase in the defined table name. This property will not available if you select Manual query check box

|

|

TStatCatcher Statistics |

This check box is for gather the job processing metadata at the job level as well as component level |

- Global variables

|

ERROR_MESSAGE |

This is the error message generated by the component when error occurs |

|

NB_LINE |

Provide the number of rows proceed and returns integers |

- Usage

|

Usage Rule |

This component sends data to other components via row link |

- tSnowflakeOutput:

Use to insert, update, upsert or delete data in a output snowflake table incoming from its preceding component. It uses the bulk loader for high performance database operations provided by snowflake.

- Standard properties

- Basic settings

|

Database |

Select the database list and apply accordingly |

|

Property type |

Select the way connection details build in or repository |

|

Connection Component |

Select the connection component and use existing connection |

|

Account |

Enter provided account name within double quotes |

|

User Id and password |

Enter provided login name within double quotes and click […] button to enter password within double quotes |

|

Warehouse |

Enter Warehouse name within double quotes as per your project |

|

Schema |

Enter schema name to be used within double quotes |

|

Database |

Enter database name to be used within double quotes |

|

Table |

Click […] button and in select snowflake table to be used |

|

Schema and Edit Schema |

create and store the schema locally for this component or use already created schema in the repository. You can reuse it in various projects and job designs. |

|

Output Action |

Select the operation to insert, delete, update or merge(upsert) data in snowflake tables |

- Advance settings

|

Use custom snowflake region |

To use customize snowflake region select the checkbox and provide region id as per region used within double quotes like eu-west-1 or east-us |

|

Login Timeout |

Specify login timeout to wait for response when connecting to snowflake before returning an error |

|

Role |

Provide the snowflake role you have assigned if empty it will take public role automatically granted |

|

Tracing |

Use for taking log for snowflake JDBC driver |

|

Allow snowflake to convert column to uppercase |

Select checkbox to convert lowercase to uppercase in the defined table name. This property will not available if you select Manual query check box

|

|

TStatCatcher Statistics |

This check box is for gather the job processing metadata at the job level as well as component level |

- Global variables

|

ERROR_MESSAGE |

This is the error message generated by the component when error occurs |

|

NB_LINE |

Provide the number of rows proceed and returns integers |

|

NB_REJECT |

Provide the number of rows rejected and returns integers |

|

NB_SUCCESS |

Provide the number of rows Successfully proceed and returns integers |

- Usage

|

Usage Rule |

It is end component of a data flow in your job. It receives data from other component via Row > Main link and provide the error information like The name of the column in error, The number of row in error, The category of the error, such as parsing error or a conversion error, The character offset in the line in error, SQLSTATE for the error or Snowflake error code |

- tSnowflakeClose:

Use for close active snowflake connection and released the occupied resources

- Standard properties

- Basic settings

|

Database |

Select the database list and apply accordingly |

|

Connection Component |

Select the component that opens the connection you need to close from the drop-down list |

- Advance settings

|

TStatCatcher Statistics |

This check box is for gather the job processing metadata at the job level as well as component level |

- Global variables

|

ERROR_MESSAGE |

This is the error message generated by the component when error occurs |

- Usage

|

Usage Rule |

This component is more commonly used with other snowflake component like tSnowflakeConnection component |

- Related ELT Components for Snowflake

- tELTInput:

Use to adds as many tables as required for the SQL statement to be executed. And the standard tELTInput component belongs to the ELT family.

- Standard properties

- Basic settings

|

Schema and Edit Schema |

create and store the schema locally for this component or use already created schema in the repository. You can reuse it in various projects and job designs |

|

Default table name |

Enter default table name within double quotes |

|

Default Schema name |

Enter default schema name within double quotes |

|

Mapping |

Enter and specify the metadata file for the database to be used. The metadata mapping file is used for data type conversion between database and java |

- Advance settings

|

TStatCatcher Statistics |

This check box is for gather the job processing metadata at the job level as well as component level |

- Global variables

|

ERROR_MESSAGE |

This is the error message generated by the component when error occurs To fill up a field or expression with a variable, press ctrl+ space to access the variable list and choose the variable to use from it.

|

- Usage

|

Usage Rule |

This component sends schema information to tELTMap via row link Note that the output link to be used with these components must correspond strictly to the syntax of the table name.

Note: The ELT components do not handle actual data flow but only the schema information |

- tELTMap:

It is same as tMap component uses the tables provided as input to feed the parameter in the built SQL statement. The statement can include inner or outer joins to be implemented between tables or between one table and its aliases. It belongs to ELT family.

- Standard properties

- Basic settings

|

Use an existing Connection |

Select the connection component click to existing connection and use relevant connection |

|

ELT Map Editor |

The ELT Map editor allows you to define the output schema and make a graphical build of the SQL statement to be executed. The column names of schema can be different from the column names in the database. |

|

User Id and password |

Enter provided login name within double quotes and click […] button to enter password within double quotes |

|

Property type |

Either Build-In or Repository. Build-in: No property data stored centrally. Repository: select the repository file to store the properties |

|

Mapping |

create and store the schema locally for this component or use already created schema in the repository. You can reuse it in various projects and job designs. |

- Advance settings

|

Additional JDBC parameter |

Add connection properties for additional DB connection you are creating. No need to use if you have selected use an existing connection in basic settings. |

|

TStatCatcher Statistics |

This check box is for gather the job processing metadata at the job level as well as component level |

- Global variables

|

ERROR_MESSAGE |

This is the error message generated by the component when error occurs To fill up a field or expression with a variable, press ctrl+ space to access the variable list and choose the variable to use from it. |

- Usage

|

Usage Rule |

This component sends schema information to tELTOutput via row link Note that the output link to be used with these components must correspond strictly to the syntax of the table name.

Note: The ELT components do not handle actual data flow but only the schema information |

- tELTOutput:

Use for inserts the data according to the output schema defined in the tELTMap component including action and clauses on the table specified.

- Standard properties

- Basic settings

|

Action on Table |

Select an operation like None, Drop and create table, create table, create table if does not exists, drop table if exist and create, Truncate table as per your requirement from the dropdown menu |

|

Action on Data |

Select and perform Insert, Update and Delete operations accordingly |

|

Schema and Edit Schema |

create and store the schema locally for this component or use already created schema in the repository. You can reuse repository n various projects and job designs. |

|

Where clause (for Update and Delete only) |

Enter a clause to filter the data to be updated or deleted during the update or delete operations.

|

|

Default Table Name |

Enter default table name within double quotes |

|

Default Schema Name |

Enter default schema name within double quotes |

|

User Defined Table Name |

Select the checkbox to define a different output table name between double quotes, in the appeared Table Name field |

|

Mapping |

Enter and specify the metadata file for the database to be used. The metadata mapping file is used for data type conversion between database and java |

- Advance settings

|

Use Update Statement without Subqueries |

Select option to generate an UPDATE statement for the database. Option is available only when update is selected from the Action on data in basic settings. |

|

TStatCatcher Statistics |

This check box is for gather the job processing metadata at the job level as well as component level |

- Global variables

|

ERROR_MESSAGE |

This is the error message generated by the component when error occurs |

|

NB_LINE |

Provide the number of rows proceed and returns integer |

|

NB_LINE INSERTED |

The number of rows inserted and returns integer |

- Usage

|

Usage Rule |

This component is used with tELTMap via row link Note that the output link to be used with these components must correspond strictly to the syntax of the table name.

Note: The ELT components do not handle actual data flow but only the schema information |

- Final Thoughts:

Traditional data warehousing methods and technologies are faced with a major challenge to provide the kind of service, simplicity and value required to sustain in today’s demanding and rapidly changing business environment.

The magic combination of Snowflake and Talend –a Cloud data integration leader is not just super fun and easy to work with but is also an attractive proposition as a Cloud Data warehousing solution.

About Girikon:

Girikon is a reputed name in the Salesforce consulting, Salesforce implementation and Salesforce support space offers end-to-end IT services in order to cater to the needs of clients across different industry verticals.

+1-480-382-1320

+1-480-382-1320 +44-7428758945

+44-7428758945 +61-1300-332-888

+61-1300-332-888 +91 9811400594

+91 9811400594